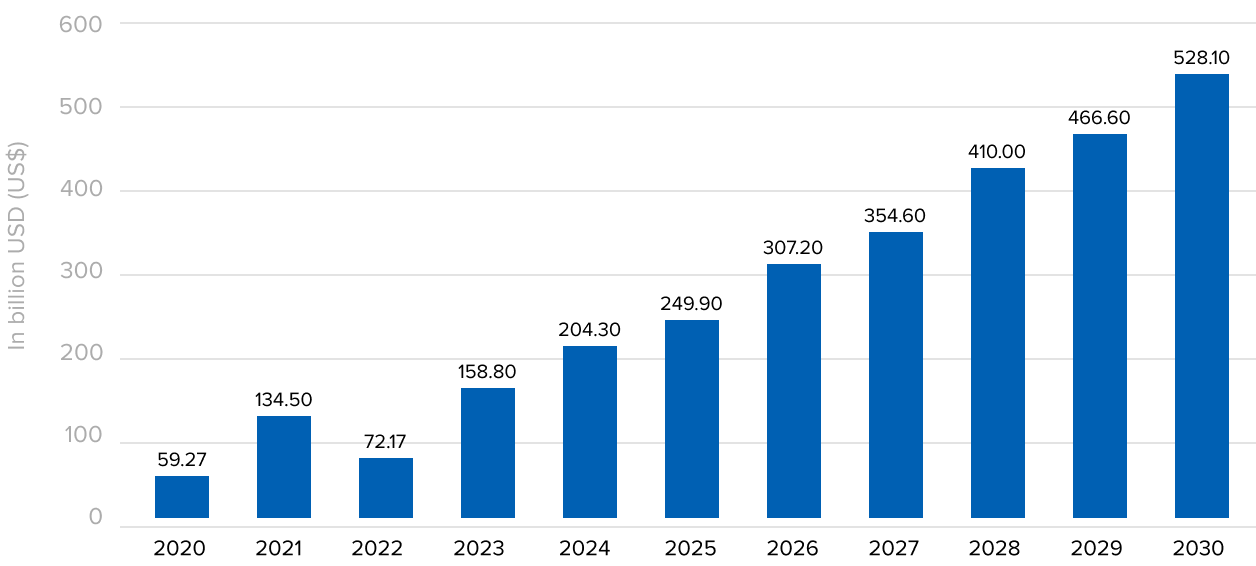

According to Statista:

- The Machine Learning market is projected to reach US$204.30bn in 2024.

- The market is expected to grow at an annual rate (CAGR 2024-2030) of 17.15%, resulting in a market volume of US$528.10bn by 2030.

- In global comparison, the largest market will be the United States (US$70.63bn in 2024).
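The 2030 figure follows from compounding the 2024 value at the quoted growth rate. Here is a quick sanity check, written as a small sketch. The variable names are ours, and we assume the rate compounds once per year over the six years from 2024 to 2030.

```python
# Sanity check of the projection quoted above.
# Assumption: the CAGR compounds annually from 2024 to 2030 (6 periods).
base_2024_bn = 204.30   # US$ bn, projected 2024 market size
cagr = 0.1715           # 17.15% compound annual growth rate
years = 2030 - 2024     # number of compounding periods

projected_2030_bn = base_2024_bn * (1 + cagr) ** years
print(f"Projected 2030 market volume: US${projected_2030_bn:.2f}bn")
```

The result lands at roughly US$528bn, in line with the quoted 2030 market volume.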

Last year, we told you about the trends and sentiments that the ML and AI revolutions would bring. Read our previous articles, “ML Trends To Blow Your Mind” and “The Bomb of 2023: AI and ML Trends,” and share your expectations and thoughts on the progress of Machine Learning.

After all, AI technologies keep moving into new industries, offering solutions that can help save the environment, make people’s lives easier, or automate processes and conserve resources.

We can’t predict exactly what will happen in a year or what new revolution ML and AI will cause. But we can consider the most likely scenarios and explore the next wave of Machine Learning and Artificial Intelligence.

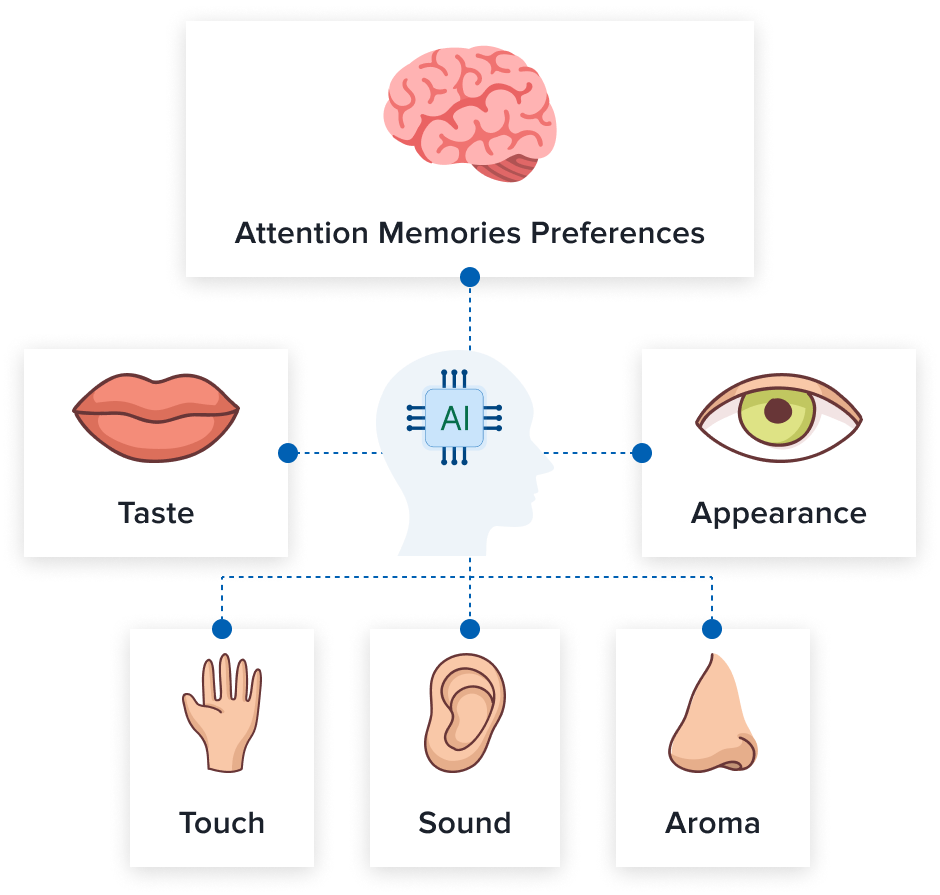

Multimodal AI

Multimodal artificial intelligence may become the next wave of Machine Learning. It covers different types of input, such as text, images, video, and sound. This kind of technology goes beyond single-mode data processing and builds a bridge to processing sensory information the way humans do.

How does it work?

Multimodal generative AI systems typically rely on models that combine several types of input, such as images, video, audio, and words, provided as a prompt. The models convert these inputs into output, which can likewise contain text, images, video, and/or audio. They are trained on large amounts of text, images, video, and audio, and in doing so learn patterns and associations between text descriptions and the corresponding images, videos, or audio recordings.

Back in November 2023 at EmTech MIT, Mark Chen, head of frontiers research at OpenAI, commented on this technology: “The interfaces of the world are multimodal. We want our models to see what we see and hear what we hear, and we want them to also create content that appeals to more than one of our senses.”

With its multimodal capabilities, OpenAI’s GPT-4 can respond to visual and audio inputs. Such technologies open up completely new horizons and opportunities for almost any industry. For example, in the healthcare industry, multimodal AI has the potential to improve diagnostic accuracy by analyzing medical images, patient history, and genetic information.

“As our models get better and better at modeling language and start to reach the limits of what they can learn from language, we want to provide the models with input from the world so they can perceive the world on their own and draw their conclusions from things like video or audio data,” Chen said.

The Green AI Revolution

It’s no news that the world is moving towards sustainability, smart consumption, and resource conservation. AI can pick up this trend by introducing environmentally friendly solutions, and we are currently witnessing the rise of Green AI. It aims to make Machine Learning itself more energy efficient and to apply it to optimizing energy consumption and to monitoring and preserving the environment.

Examples include designing energy-efficient buildings, predicting climate-related events, and optimizing environmental practices. The goal of Green AI is to improve energy efficiency, reduce greenhouse gas emissions, and promote sustainable practices.

Companies engaged in environmental AI can develop highly profitable programs and pursue net-zero goals at the same time. According to PwC, using AI for the environment could add up to $5.2 trillion to the global economy in 2030 while reducing greenhouse gas emissions by 4%. Impressive, isn’t it?

Today, we are looking at a reality in which Artificial Intelligence can autonomously eliminate waste, optimize complex processes, and reduce global emissions.

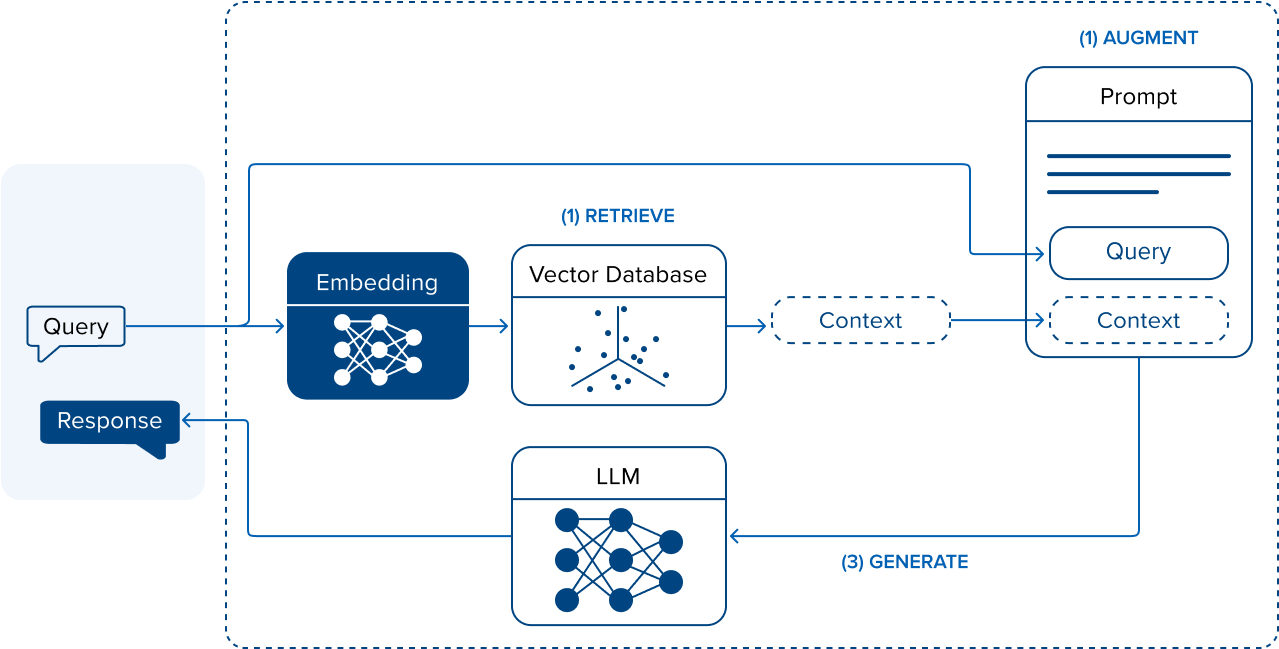

Retrieval-augmented Generation (RAG)

Generative AI took center stage in the tech world in 2023, a year filled with rapid advances in the capabilities of large language models (LLMs). The year 2024 promises further progress in what these models can do.

However, generative AI tools still have room for improvement: they suffer from hallucinations, that is, plausible-sounding but incorrect answers to user queries. This is an obstacle to adopting generative tools in corporate settings, where such errors can be critical for business.

Retrieval-augmented generation (RAG) adds an intermediate step between the user’s query and the generation of LLM output. Relevant information is first retrieved from an external source, such as a document collection or knowledge base. The user’s query is then augmented with that information and passed to the LLM as an enriched prompt. This helps LLMs produce more accurate, contextually relevant answers.

In this way, RAG can complement and improve generative models while reducing the risk of errors.
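To make the flow concrete, here is a minimal sketch of the retrieve-then-augment step. Everything in it is an assumption made for illustration: the sample documents, the word-overlap scoring, and the prompt template are ours. Production systems typically retrieve with vector embeddings, and the final prompt would be sent to an actual LLM instead of being printed.

```python
# Toy retrieval-augmented generation pipeline (illustrative only).
# Assumptions: the documents, the word-overlap ranking, and the prompt
# template are hypothetical stand-ins for a real knowledge base,
# an embedding-based retriever, and a real LLM call.
import re

DOCUMENTS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support team is available Monday to Friday, 9:00 to 18:00 CET.",
    "Premium subscribers get free shipping on all orders.",
]

def tokenize(text):
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=1):
    """Return the top_k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query, context_docs):
    """Augment the user's query with the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

query = "How long do refunds take to be processed?"
context_docs = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, context_docs)
print(prompt)  # in a real system, this prompt would be passed to the LLM
```

Because the model is asked to answer from the retrieved context, its output is grounded in known documents instead of relying only on what it memorized during training.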

Autonomous Agents

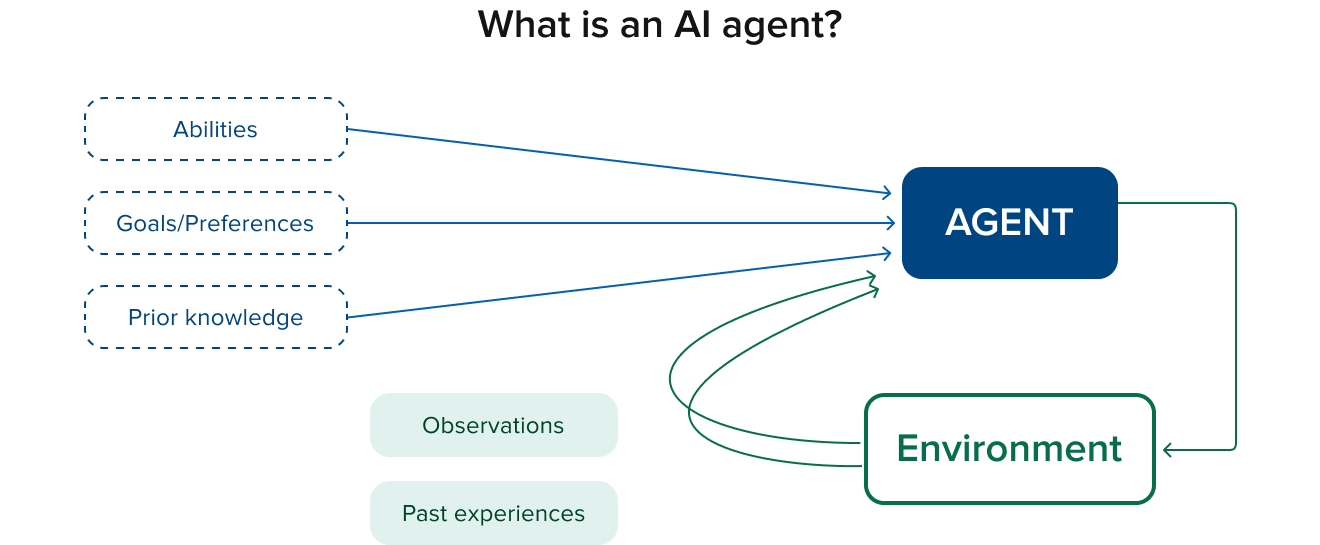

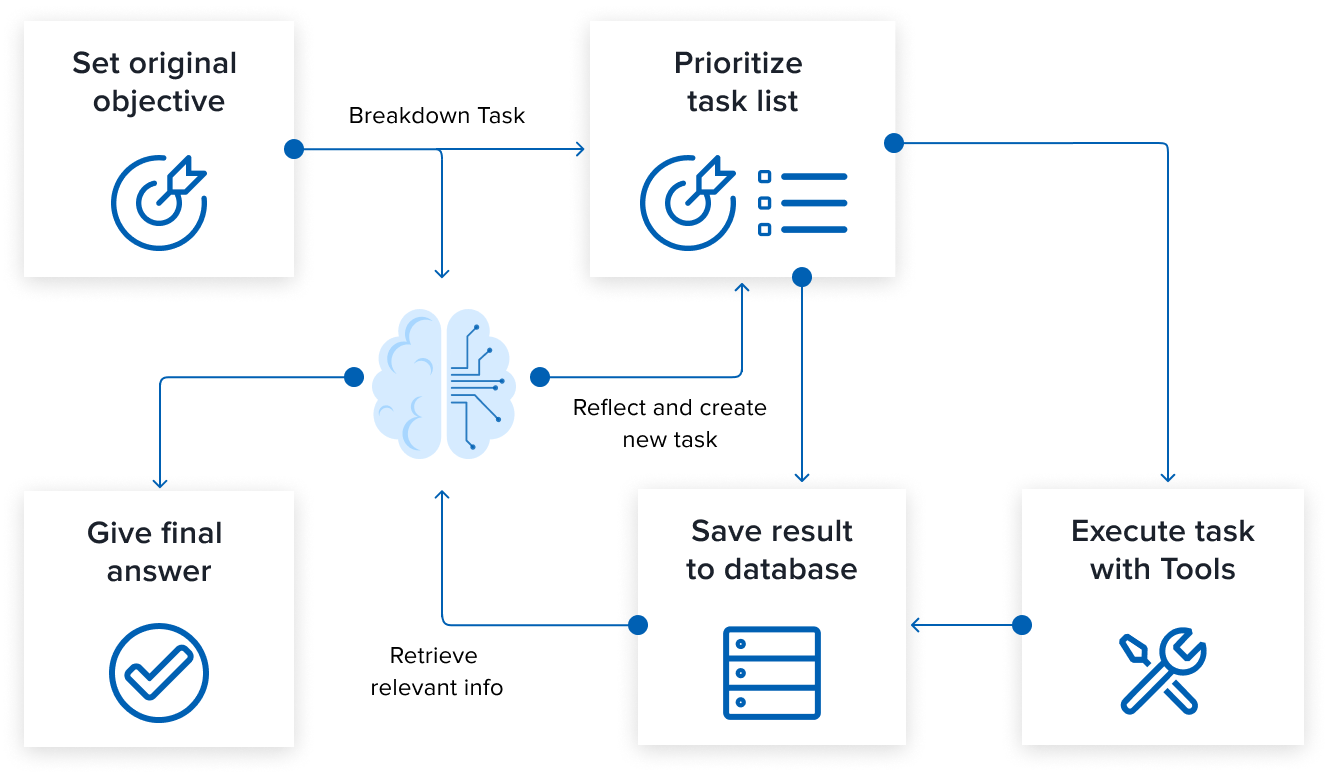

Agentic AI represents an innovative strategy for building generative AI systems and marks a shift from reactive to proactive AI. Autonomous agents are programs that are designed for a specific purpose but can act independently. Unlike traditional artificial intelligence systems that follow predefined programming, they analyze their environment and use data to learn and adapt to situations and events, which lets them make decisions without human intervention.
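The core of such an agent is a loop: observe the environment, decide on an action that serves the goal, act, and repeat. The sketch below shows only that loop. The `Room` environment, the target temperature, and the rule-based policy are hypothetical, and the agent here does not learn. Real agents usually plan with an LLM or a learned policy and call external tools or services.

```python
# Toy autonomous agent: an observe -> decide -> act loop with a fixed goal.
# Assumptions: the Room environment and the rule-based policy are
# hypothetical and exist only to illustrate the control loop.
from dataclasses import dataclass

@dataclass
class Room:
    temperature: float  # degrees Celsius

    def apply(self, action):
        """Update the room state in response to the agent's action."""
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0

class ThermostatAgent:
    def __init__(self, target, tolerance=0.5):
        self.target = target        # the goal the agent was designed for
        self.tolerance = tolerance  # acceptable distance from the goal

    def decide(self, observed):
        """Choose an action based on the observed temperature."""
        if observed < self.target - self.tolerance:
            return "heat"
        if observed > self.target + self.tolerance:
            return "cool"
        return "idle"

def run(agent, room, max_steps=20):
    """Run the loop until the goal is reached or max_steps is exhausted."""
    history = []
    for _ in range(max_steps):
        observation = room.temperature      # observe
        action = agent.decide(observation)  # decide
        history.append(action)
        if action == "idle":                # goal reached, nothing to do
            break
        room.apply(action)                  # act
    return history

room = Room(temperature=17.0)
actions = run(ThermostatAgent(target=21.0), room)
print(actions, room.temperature)
```

No human steps in between observations: the agent keeps acting until the environment matches its goal.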

Computer scientist Peter Norvig, a fellow at the Stanford Institute for Human-Centered Artificial Intelligence, wrote that “2023 was the year of being able to communicate with AI. In 2024, we will see the ability of agents to do everything for you. Making reservations, planning a trip, connecting to other services.”

This kind of technology will improve the customer experience through intelligent, responsive interaction while cutting costs by reducing the need for human intervention.

Mark Chen, head of frontiers research at OpenAI, said, “I think that multimodal together with GPTs will open up the no-code development of computer vision applications, just in the same way that prompting opened up the no-code development of a lot of text-based applications.”

Open-source AI

Large language models and other generative AI models require a lot of computation, which makes building them very expensive. However, open-source models let developers modify and build on existing code, thereby reducing costs and expanding access to AI.

According to GitHub, in 2023, generative AI projects entered the top 10 most popular projects on the code hosting platform for the first time, and projects such as Stable Diffusion and AutoGPT attracted thousands of new contributors.

Open generative AI models will continue to evolve in 2024 as part of the next wave of Machine Learning. According to some forecasts, they will become comparable to proprietary models. In 2023, Llama 2 70B, Mixtral-8x7B, and Stable Diffusion became popular alternatives to proprietary models such as GPT-4, PaLM 2, and Midjourney.

Thus, 2024 may be the year when the gap between open-source and proprietary models narrows. This will make it possible to deploy generative AI models in hybrid or local environments.

Conclusion

For now, all predictions and expectations remain just that. However, we can say for sure that 2024 will be interesting to follow!

Share your predictions, and let the journey of the next wave of Machine Learning begin!